#MILKYTRACKER IMPORT FINE TUNE CODE#

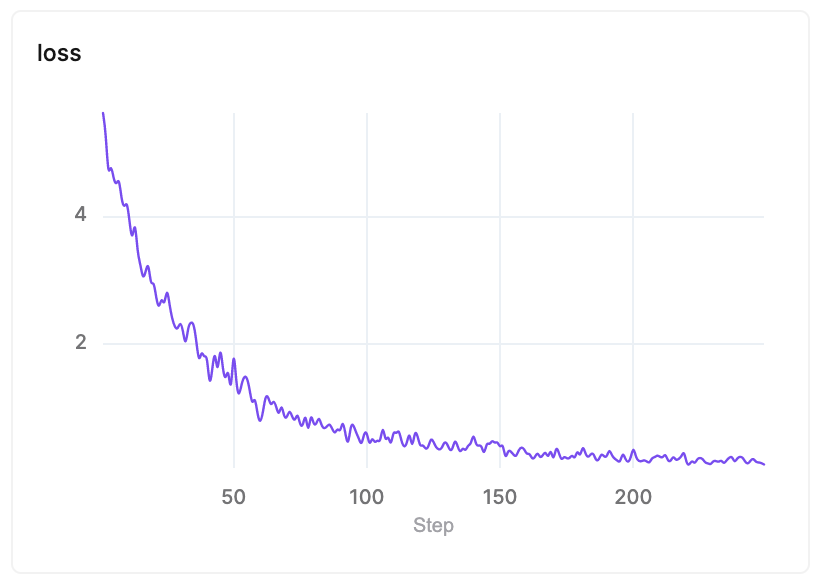

To feed a QA task into BERT, we pack both the question and the reference text into the input. The two pieces of text are separated by the special [SEP] token.

BERT also uses “Segment Embeddings” to differentiate the question from the reference text. These are simply two embeddings (for segments “A” and “B”) that BERT learned, and which it adds to the token embeddings before feeding them into the input layer.

BERT needs to highlight a “span” of text containing the answer. This is represented as simply predicting which token marks the start of the answer, and which token marks the end.

For every token in the text, we feed its final embedding into the start token classifier. The start token classifier only has a single set of weights (represented by the blue “start” rectangle in the above illustration) which it applies to every word.

After taking the dot product between the output embeddings and the “start” weights, we apply the softmax activation to produce a probability distribution over all of the words. Whichever word has the highest probability of being the start token is the one that we pick. We repeat this process for the end token, for which we have a separate weight vector.

In the example code below, we’ll be downloading a model that’s already been fine-tuned for question answering, and try it out on our own text. If you do want to fine-tune on your own dataset, it is possible to fine-tune BERT for question answering yourself. See run_squad.py in the transformers library. However, you may find that the below “fine-tuned-on-squad” model already does a good job, even if your text is from a different domain.

Note: The example code in this Notebook is a commented and expanded version of the short example provided in the transformers documentation.
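The input packing and span selection described above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the transformers implementation: the two-dimensional “embeddings” and both weight vectors are made up by hand, and `pack_inputs`, `token_probabilities`, and `pick_span` are hypothetical helper names. The leading `[CLS]` token, the `[SEP]` separators, and the 0/1 segment IDs follow the usual BERT convention.

```python
import math


def pack_inputs(question_tokens, text_tokens):
    """Pack a question and a reference text into one token sequence."""
    tokens = ["[CLS]"] + question_tokens + ["[SEP]"] + text_tokens + ["[SEP]"]
    # Segment A (0) covers [CLS], the question, and the first [SEP];
    # segment B (1) covers the reference text and the final [SEP].
    segment_ids = [0] * (len(question_tokens) + 2) + [1] * (len(text_tokens) + 1)
    return tokens, segment_ids


def softmax(scores):
    """Turn raw scores into a probability distribution."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def token_probabilities(embeddings, weights):
    """Dot each token's final embedding with one weight vector, then softmax."""
    scores = [sum(e * w for e, w in zip(emb, weights)) for emb in embeddings]
    return softmax(scores)


def pick_span(embeddings, start_weights, end_weights):
    """Pick the most probable start token and the most probable end token."""
    start_probs = token_probabilities(embeddings, start_weights)
    end_probs = token_probabilities(embeddings, end_weights)
    start = max(range(len(start_probs)), key=start_probs.__getitem__)
    end = max(range(len(end_probs)), key=end_probs.__getitem__)
    return start, end


tokens, segment_ids = pack_inputs(["who", "won", "?"], ["the", "broncos", "won"])

# Hand-made stand-ins for BERT's final-layer embeddings (assumption: 2 dimensions).
embeddings = [[0.0, 0.0] for _ in tokens]
embeddings[5] = [2.0, 0.0]  # "the" scores highly against the start weights
embeddings[6] = [0.5, 3.0]  # "broncos" scores highly against the end weights

start_weights = [1.0, 0.0]  # made-up "start" weight vector
end_weights = [0.0, 1.0]    # made-up "end" weight vector

start, end = pick_span(embeddings, start_weights, end_weights)
print(tokens[start:end + 1])
```

Picking the start and end independently, as this sketch does, can in principle produce an end index that comes before the start index; a real pipeline would need to handle that case.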
When someone mentions “Question Answering” as an application of BERT, what they are really referring to is applying BERT to the Stanford Question Answering Dataset (SQuAD). The task posed by the SQuAD benchmark is a little different than you might think. Given a question, and a passage of text containing the answer, BERT needs to highlight the “span” of text corresponding to the correct answer.

The SQuAD homepage has a fantastic tool for exploring the questions and reference text for this dataset, and even shows the predictions made by top-performing models. For example, here are some interesting examples on the topic of Super Bowl 50.
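To make the “span” idea concrete, here is a small sketch of what a SQuAD-style example looks like. The passage and question are written for illustration and are not copied from the dataset, the flat dictionary is a simplification of the nested SQuAD JSON, and `answer_span` is a hypothetical helper. The point is that the answer is stored as a piece of text plus the character offset where it begins in the passage.

```python
# An illustrative record (not taken from SQuAD itself).
context = "The Denver Broncos defeated the Carolina Panthers 24-10 in Super Bowl 50."
answer_text = "Denver Broncos"

example = {
    "question": "Which team won Super Bowl 50?",
    "context": context,
    # SQuAD v1.1 answers carry the answer text and its character start offset.
    "answers": [{"text": answer_text, "answer_start": context.index(answer_text)}],
}


def answer_span(example):
    """Return the (start, end) character offsets of the first answer."""
    answer = example["answers"][0]
    start = answer["answer_start"]
    end = start + len(answer["text"])
    return start, end


start_char, end_char = answer_span(example)
# Slicing the passage with the span recovers the answer exactly.
print(example["context"][start_char:end_char])
```

Because the answer is always a slice of the passage, the model never has to generate text; it only has to point at where the answer starts and ends.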

#MILKYTRACKER IMPORT FINE TUNE INSTALL#

By Chris McCormick

Part 1: How BERT is applied to Question Answering

The SQuAD v1.1 Benchmark

Install huggingface transformers library

What does it mean for BERT to achieve “human-level performance on Question Answering”? Is BERT the greatest search engine ever, able to find the answer to any question we pose it? In Part 1 of this post / notebook, I’ll explain what it really means to apply BERT to QA, and illustrate the details. Part 2 contains example code: we’ll be downloading a model that’s already been fine-tuned for question answering, and try it out on our own text!

For something like text classification, you definitely want to fine-tune BERT on your own dataset. For question answering, however, it seems like you may be able to get decent results using a model that’s already been fine-tuned on the SQuAD benchmark. In this Notebook, we’ll do exactly that, and see that it performs well on text that wasn’t in the SQuAD dataset.
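As a rough sketch of what “downloading a model that’s already been fine-tuned” can look like with the transformers library: the code below assumes `torch` and `transformers` are installed and that the machine can download weights. The checkpoint name is an assumption (a publicly available SQuAD-fine-tuned BERT), not something this text specifies, and `answer_question` is a hypothetical helper name.

```python
def answer_question(
    question,
    reference_text,
    # Assumed checkpoint name; swap in whichever SQuAD-fine-tuned model you use.
    model_name="bert-large-uncased-whole-word-masking-finetuned-squad",
):
    """Return the answer span that a SQuAD-fine-tuned BERT picks from the text."""
    # Imported here so that defining the function does not require the libraries.
    import torch
    from transformers import BertForQuestionAnswering, BertTokenizer

    tokenizer = BertTokenizer.from_pretrained(model_name)
    model = BertForQuestionAnswering.from_pretrained(model_name)
    model.eval()

    # Passing two strings packs them as: [CLS] question [SEP] reference text [SEP]
    inputs = tokenizer(question, reference_text, return_tensors="pt")

    with torch.no_grad():
        outputs = model(**inputs)

    # One score per token for "is the start" and one for "is the end".
    start = int(torch.argmax(outputs.start_logits))
    end = int(torch.argmax(outputs.end_logits))

    answer_ids = inputs["input_ids"][0, start:end + 1].tolist()
    return tokenizer.decode(answer_ids)
```

Calling `answer_question("Which team won?", some_passage)` would download the weights on first use. This sketch does not handle passages longer than the model’s maximum input length, nor the case where the predicted end comes before the predicted start.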

#MILKYTRACKER IMPORT FINE TUNE ARCHIVE#

Question Answering with a Fine-Tuned BERT
